The operational advantage of GDPR requirements

On 25th May 2018, the General Data Protection Regulation (GDPR) changed the data privacy landscape forever. What organisations sometimes missed when the regulation came into force was that GDPR should not have been viewed as a burden or treated as a tick-box exercise.

It was an opportunity to take mandatory regulatory budget and use it to elevate and transform organisations’ data capabilities, turning the stick of fines of up to 4% of global turnover into a carrot that leads each organisation’s data journey forward.

What GDPR did was usher in a new era of protecting personal data from misuse by organisations. It was a regulation with the teeth to make organisations pause and assess how they manage personal data: its use, access, location and purpose. Unfortunately, although they achieved compliance, it became a tick-box exercise for some organisations. At worst, they assembled Excel sheets listing data locations, assigned data owners, Data Protection Impact Assessment (DPIA) scores and so on. At best, they delivered compliance by combining data governance software with changes to policy and legal documents, in order to get a seal of approval from internal legal or audit teams, an external consultancy, or both, that they were GDPR-compliant. Neither approach addressed the clear need for an ideological and cultural shift in how data is viewed and managed.

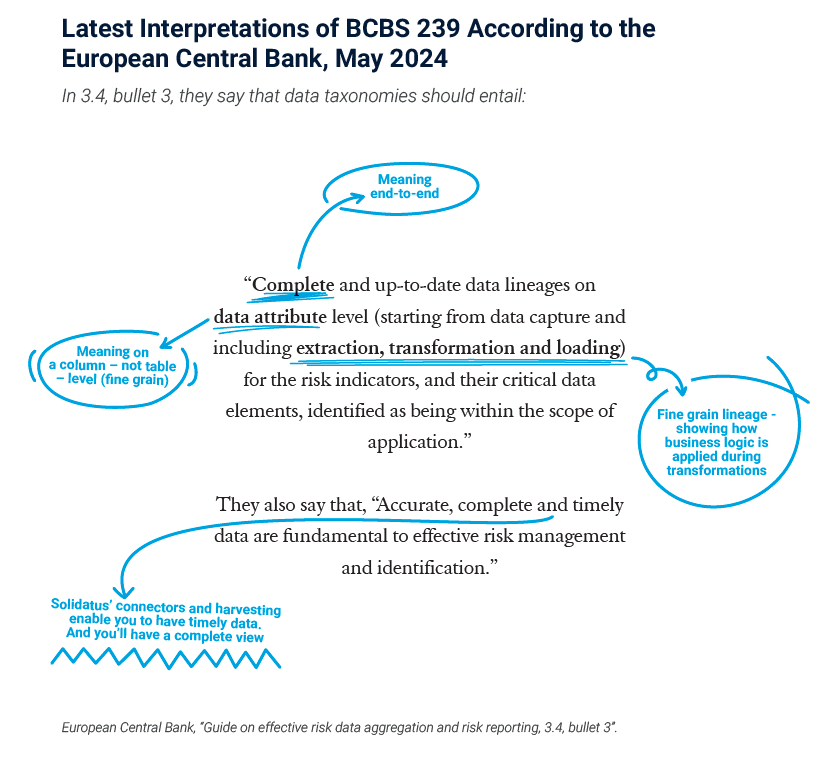

The important point was that with GDPR setting the bar so high, other jurisdictions had no choice but to follow and to raise the bar higher and higher. For organisations with multi-jurisdictional exposure, the regulation-specific, non-reusable, narrowly focused methodologies employed mean that the cost and effort required to implement each regulation is similar. So, if it cost $10M to implement GDPR, then it will cost a similar amount to implement each of the California Consumer Privacy Act (CCPA), Brazilian General Data Protection Law (LGPD), Personal Data Protection Act 2012 (PDPA), New York Privacy Act, India Data Protection, and Malta Data Protection, among others. With thousands of companies implementing all of the same regulations, in the same way, the cost to business innovation is eye-wateringly high, at over $10B for GDPR alone. That is before counting the continued additional burden of data protection and governance, with whole departments focused on controlling and limiting access rather than enabling it.

The principles of data privacy that GDPR and subsequent regulations demand shouldn’t be considered a differentiating factor for clients when choosing a provider; they should be the minimum level of expectation, in much the same way that the minimum expectation of a physical structure such as a building or bridge is that it will not fail and severely harm its users. Civil engineers have had centuries to evolve their discipline into the formalised, well-planned, well-understood, well-documented and well-structured endeavour that it is today. Software engineering is in its infancy by comparison: rapidly evolving, but lacking much of the formalisation, planning, shared understanding, standards and structure required to support the principle of Privacy by Default and by Design.

There’s a strong argument that organisations should have taken a more engineered approach to data protection, one that treated it as an opportunity to understand an organisation’s data, processes and actors (its people), with each component viewed not in isolation but as an element of a larger ecosystem. In reality, this was an opportunity for re-use, with the continual improvement of data, processes and policies culminating in a knowledge graph or organisational data blueprint that enables the storage and contextual access of both explicit and tacit knowledge.

For multi-jurisdictional organisations, having to comply with not one complex and onerous privacy regulation but up to one hundred becomes a cost that cripples both innovation and the organisation itself. However, all of the privacy regulations share a significant amount of common ground, and so when they are addressed with re-use-focused methodology and tooling, the cost of compliance falls significantly with each new regulation that is implemented. Only the delta between the regulations needs to be modelled and impact-assessed anew. The organisation builds up a cumulative common taxonomy that describes all of the data privacy requirements and their impacts on the business in terms of processes and data.

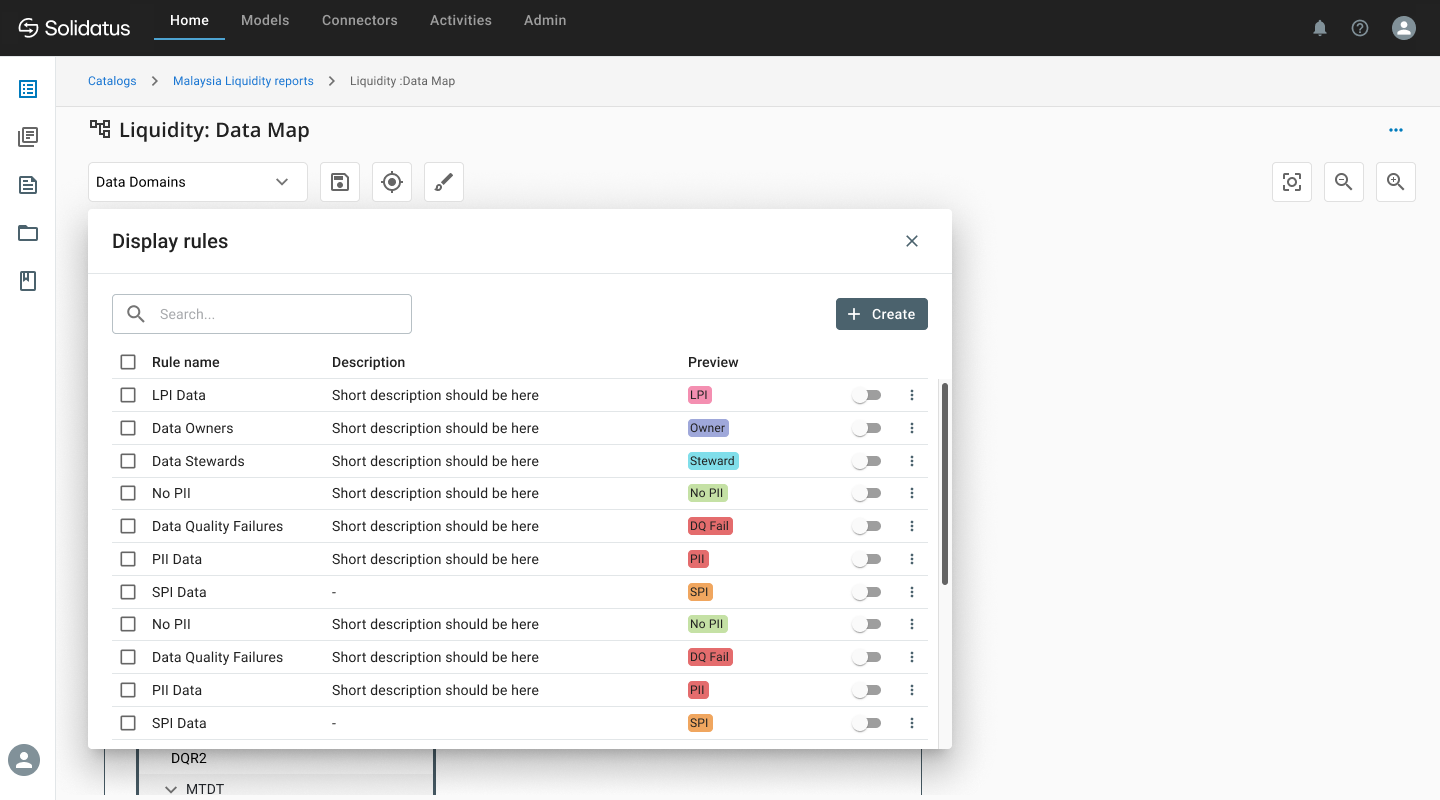
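The delta idea can be sketched in a few lines of Python. This is an illustration only: the regulation names (`regulation_a` and so on) and the requirement labels are hypothetical placeholders, not a reading of any real regulation, and the function `incremental_requirements` is invented for this example rather than taken from any product.

```python
def incremental_requirements(regulations):
    """For each regulation, in the order given, return only the requirements
    that are not already covered by the regulations implemented before it,
    plus the cumulative taxonomy built up along the way."""
    taxonomy = set()   # cumulative common taxonomy of requirements
    deltas = {}        # regulation name -> requirements that are new work
    for name, requirements in regulations.items():
        requirements = set(requirements)
        deltas[name] = requirements - taxonomy
        taxonomy |= requirements
    return deltas, taxonomy


# Hypothetical requirement labels, for illustration only.
regulations = {
    "regulation_a": {"data_inventory", "lawful_basis", "access_request",
                     "erasure_request", "breach_notification"},
    "regulation_b": {"data_inventory", "access_request",
                     "erasure_request", "opt_out_of_sale"},
    "regulation_c": {"data_inventory", "lawful_basis",
                     "breach_notification", "local_representative"},
}

deltas, taxonomy = incremental_requirements(regulations)
for name, new_work in deltas.items():
    print(name, "->", len(new_work), "new requirement(s):", sorted(new_work))
```

In this toy data the first regulation needs all five of its requirements modelled, while the second and third each add only one new requirement to the shared taxonomy; everything else is re-used.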

Solidatus provides organisations with a solution that allows them to fundamentally redesign their organisational data culture and capabilities. It provides for the creation of a holistic, organisation-wide digital map that details all of the relationships that interact with the organisation’s data and their impacts, including the physical location of data, its classification, its purpose, its access rights, its retention requirements, its interaction with processes and policies, its quality, and so on. It also enables the internal map to be aligned to the external regulations, allowing users to view connectivity and impact from any focal point and through any lens. If GDPR changes, how will those changes affect my organisation and its compliance? If my processes, systems or policies change, how will that affect my ability to remain compliant? Solidatus implements a methodology that is an evolutionary leap forward in data management, shifting the dial from reactive to proactive. It favours “Planned Change” (checking the plan before removing a wall, then updating the plan and removing the wall only if it is safe to do so) over “Captured Change” (removing a wall and then updating the plan, with no checks on the impact of that change). All of this is held within a quick and simple-to-use, extendable, scalable, audited, governed and versioned graph repository.
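To make the “if this changes, what is affected?” question concrete, here is a minimal sketch of impact analysis over a dependency map, written in plain Python. It is not the Solidatus API or data model; the node names, the `depends_on_me` mapping and the `impacted_by` function are all hypothetical and exist only to illustrate checking the plan before making a change.

```python
from collections import deque


def impacted_by(graph, start):
    """Return every node reachable downstream of `start`, i.e. everything
    that could be affected if `start` changes (breadth-first traversal)."""
    seen = set()
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for dependant in graph.get(node, ()):
            if dependant != start and dependant not in seen:
                seen.add(dependant)
                queue.append(dependant)
    return seen


# Hypothetical map: each key lists the things that depend on it.
depends_on_me = {
    "regulation:erasure_right": ["policy:retention"],
    "policy:retention": ["process:customer_offboarding", "system:crm"],
    "process:customer_offboarding": ["dataset:customer_contacts"],
    "system:crm": ["dataset:customer_contacts"],
}

# "Planned Change": inspect the impact before touching the policy.
for node in sorted(impacted_by(depends_on_me, "policy:retention")):
    print("would be affected:", node)
```

The same traversal answers both questions in the paragraph above: start from a regulation node to see what an external change touches, or start from an internal policy, process or system to see what an internal change touches.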

If there’s one thing I’ve learned in my 20 years of engineering software and managing data in large, heterogeneous, distributed development ecosystems, it’s that technology is rarely the problem, though it’s often blamed for failings. The real challenge we face is understanding and aligning methodologies, capabilities, technology, processes, policies and people in order to build trust in our data. And this is why Solidatus was born.